
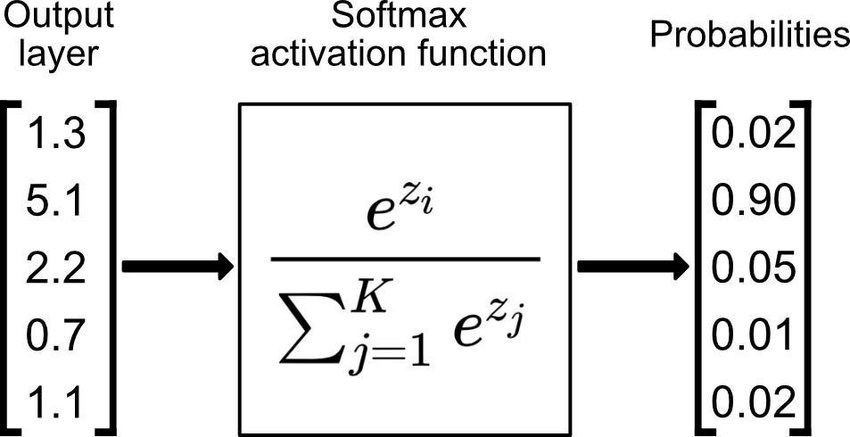

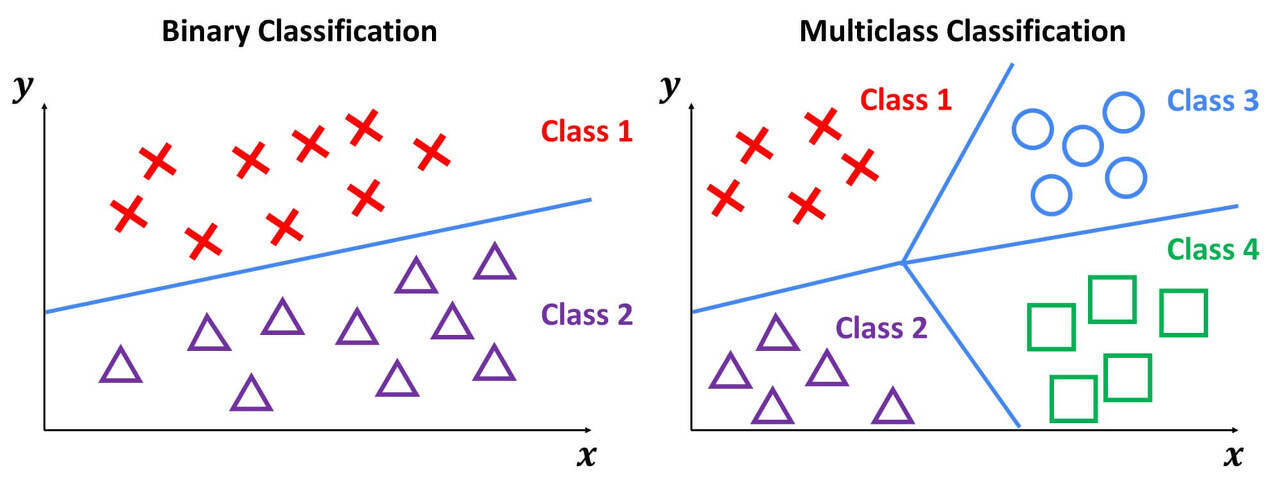

Softmax Classification: predicts which of three or more classes a given input belongs to (also called multi-class classification).

Linear: H(x) ∈ (-∞, ∞)

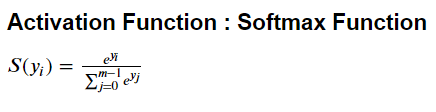

Softmax: H(x) ∈ [0, 1], and the outputs over all classes sum to 1

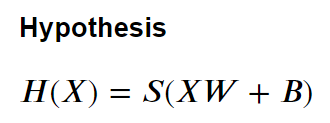

Step1) Hypothesis

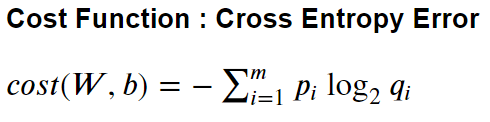
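To make the hypothesis step concrete, here is a minimal NumPy sketch. It assumes the hypothesis in the figure is the standard softmax applied to the linear scores; the logit values are made up for illustration and do not come from the post.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax; subtracting the row maximum avoids overflow in exp."""
    z = np.asarray(z, dtype=np.float64)
    shifted = z - z.max(axis=-1, keepdims=True)
    exp_z = np.exp(shifted)
    return exp_z / exp_z.sum(axis=-1, keepdims=True)

# Hypothetical linear scores (logits) for one sample and three classes
logits_example = np.array([[2.0, 1.0, 0.1]])
probs = softmax(logits_example)

print(probs)        # every entry lies in [0, 1]
print(probs.sum())  # the entries of a row sum to 1
```

The largest logit gets the largest probability, so taking the argmax of the softmax output picks the same class as taking the argmax of the linear scores.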

Step2) Cost function

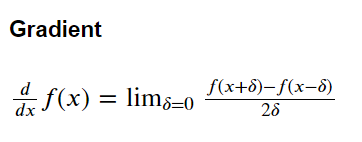

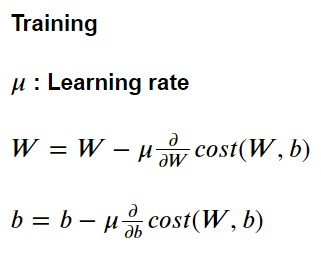
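The cost can be checked by hand on a small example. The sketch below assumes the cost in the figures is the usual mean cross-entropy between one-hot labels and softmax probabilities; the function name `cross_entropy` and the sample values are illustrative only.

```python
import numpy as np

def cross_entropy(probs, onehot, eps=1e-12):
    """Mean cross-entropy over samples; clipping guards against log(0)."""
    probs = np.clip(probs, eps, 1.0)
    return float(np.mean(-np.sum(onehot * np.log(probs), axis=1)))

# Hypothetical predicted probabilities and their one-hot labels
probs_example = np.array([[0.7, 0.2, 0.1],
                          [0.1, 0.8, 0.1]])
onehot_example = np.array([[1.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0]])

cost = cross_entropy(probs_example, onehot_example)
print(cost)  # average of -ln(0.7) and -ln(0.8)
```

Only the probability assigned to the true class contributes to each sample's cost, so the cost approaches 0 as that probability approaches 1.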

Step3) Training - gradient descent method
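As a rough illustration of what one gradient descent update does, the sketch below uses the analytic gradient of the mean softmax cross-entropy: with respect to the logits it is (p - y) / N, where p are the predicted probabilities and y the one-hot labels. The toy data and the names `x_toy`, `y_toy`, `W_toy`, `B_toy`, and `gradient_step` are assumptions for this example, not part of the post.

```python
import numpy as np

def softmax(z):
    shifted = z - z.max(axis=1, keepdims=True)  # stabilise exp
    exp_z = np.exp(shifted)
    return exp_z / exp_z.sum(axis=1, keepdims=True)

def cost_fn(x, y, W, B):
    p = softmax(x @ W + B)
    return float(np.mean(-np.sum(y * np.log(p), axis=1)))

def gradient_step(x, y, W, B, lr):
    """One gradient descent update of W and B."""
    p = softmax(x @ W + B)
    diff = (p - y) / x.shape[0]   # d(cost)/d(logits)
    grad_W = x.T @ diff           # d(cost)/dW
    grad_B = diff.sum(axis=0)     # d(cost)/dB
    return W - lr * grad_W, B - lr * grad_B

# Toy data: three samples with two features, one sample per class
x_toy = np.array([[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]])
y_toy = np.eye(3)
W_toy, B_toy = np.zeros((2, 3)), np.zeros(3)

cost_before = cost_fn(x_toy, y_toy, W_toy, B_toy)
for _ in range(100):
    W_toy, B_toy = gradient_step(x_toy, y_toy, W_toy, B_toy, lr=0.5)
cost_after = cost_fn(x_toy, y_toy, W_toy, B_toy)

print(cost_before, cost_after)  # the cost decreases as the updates repeat
```

With zero-initialised parameters every class starts at probability 1/3, so the starting cost is ln(3).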

TensorFlow

with min-max scaling

```python
# Load modules
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

# Inputs and labels
# Given age and BMI, classify the blood pressure grade as
# 0: normal, 1: caution, 2: warning.
x_input = tf.constant([[25, 22], [25, 26], [25, 30], [35, 22], [35, 26], [35, 30],
                       [45, 22], [45, 26], [45, 30], [55, 22], [55, 26], [55, 30],
                       [65, 22], [65, 26], [65, 30], [73, 22], [73, 26], [73, 30]],
                      dtype=tf.float32)
labels = tf.constant([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0], [1, 0, 0],
                      [1, 0, 0], [1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1],
                      [0, 1, 0], [0, 0, 1], [0, 0, 1], [0, 1, 0], [0, 0, 1], [0, 0, 1]],
                     dtype=tf.float32)

# Weights and bias
n_var, n_class = 2, 3
W = tf.Variable(tf.random.normal((n_var, n_class), dtype=tf.float32))
B = tf.Variable(tf.random.normal((n_class,), dtype=tf.float32))

# Min-max scaling: map each input column to [0, 1]
x_input_org = x_input
xmin, xmax = np.min(x_input, axis=0), np.max(x_input, axis=0)
x_input = (x_input - xmin) / (xmax - xmin)

# Model functions
def logits(x):
    return tf.matmul(x, W) + B

def Hypothesis(x):
    return tf.nn.softmax(logits(x))

def Cost():
    logit_value = logits(x_input)
    return tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(logits=logit_value, labels=labels))

def Predict(x):
    # Scale new inputs with the training min/max before applying the model
    return Hypothesis((x - xmin) / (xmax - xmin))

# Training parameters
epochs = 10000
lr = 0.5
opt = tf.keras.optimizers.SGD(learning_rate=lr)
training_idx = np.arange(0, epochs + 1, 1)
cost_graph = np.zeros(epochs + 1)
```
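The scaling step and `Predict` in the code above both rely on the minimum and maximum taken from the training inputs. The NumPy sketch below shows the same idea on three (age, BMI) rows picked from the post's data; the helper name `scale` is an assumption for this example.

```python
import numpy as np

# Three (age, BMI) rows taken from the post's training inputs
train = np.array([[25, 22], [45, 26], [73, 30]], dtype=np.float64)
xmin, xmax = train.min(axis=0), train.max(axis=0)

def scale(x):
    """Min-max scale x column by column using the training min and max."""
    return (np.asarray(x, dtype=np.float64) - xmin) / (xmax - xmin)

scaled_train = scale(train)
new_point = scale([[20, 25]])  # age 20 is below the training minimum of 25

print(scaled_train)  # every column now spans exactly [0, 1]
print(new_point)     # the age entry is negative, the BMI entry is 0.375
```

New inputs must be scaled with the training statistics, not their own, so that the learned weights see values on the same scale. Inputs outside the training range, such as age 20 here, land outside [0, 1], and the model is then extrapolating.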

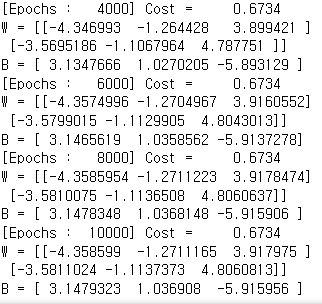

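```python
# Training
for cnt in range(0, epochs + 1):
    # Record the cost before this iteration's update
    cost_graph[cnt] = Cost()
    if cnt % (epochs // 5) == 0:
        print("[Epochs : {:>6}] Cost = {:>10.4}".format(cnt, cost_graph[cnt]))
        print("W = {:}".format(W.numpy()))
        print("B = {:}".format(B.numpy()))
    # One gradient descent update of W and B.
    # If your optimizer version does not provide minimize(), compute the
    # gradients with tf.GradientTape and call opt.apply_gradients instead.
    opt.minimize(Cost, [W, B])
```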
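The training loop runs `epochs + 1` iterations so that the cost at epoch 0 and at the final epoch are both recorded, and it prints whenever the counter is a multiple of `epochs // 5`. A small sketch with the same `epochs` value lists which iterations get logged:

```python
epochs = 10000  # same value as in the post

# Iterations at which the training loop prints the cost, W and B
logged = [cnt for cnt in range(0, epochs + 1) if cnt % (epochs // 5) == 0]
print(logged)  # [0, 2000, 4000, 6000, 8000, 10000]
```

This is also why `training_idx` and `cost_graph` are allocated with `epochs + 1` entries.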

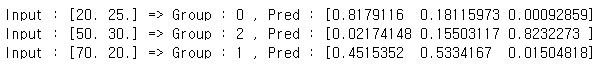

```python
# Prediction (using input values)
test_set = tf.constant([[20, 25], [50, 30], [70, 20]], dtype=tf.float32)
Hx = Predict(test_set)
H = np.argmax(Hx, axis=1)  # index of the most probable class per row

print(Hx, '\n')
for i in range(test_set.shape[0]):
    print("Input : {} => Group : {} , Pred : {}".format(test_set[i], H[i], Hx[i]))
```
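The prediction step turns each row of softmax probabilities into a class index with `argmax`. The sketch below adds a readable grade name on top of that, using the 0: normal, 1: caution, 2: warning mapping from the post's code comment. The probabilities are hypothetical and are not outputs of the trained model; `GRADE_NAMES` and `to_grades` are names made up for this example.

```python
import numpy as np

# Grade names follow the mapping stated in the post's code comment
GRADE_NAMES = ["normal", "caution", "warning"]

def to_grades(probs):
    """Return the most probable class index and its grade name for each row."""
    idx = np.argmax(probs, axis=1)
    return idx, [GRADE_NAMES[i] for i in idx]

# Hypothetical softmax outputs for three inputs
probs_example = np.array([[0.80, 0.15, 0.05],
                          [0.10, 0.30, 0.60],
                          [0.20, 0.70, 0.10]])

idx, names = to_grades(probs_example)
print(idx, names)
```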

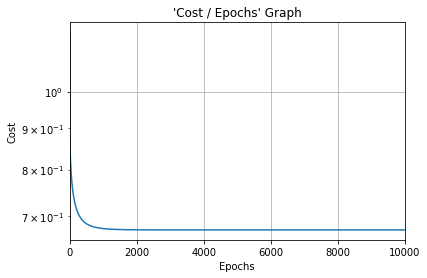

```python
# Cost function graph
plt.title("'Cost / Epochs' Graph")
plt.xlabel("Epochs")
plt.ylabel("Cost")
plt.plot(training_idx, cost_graph)
plt.xlim(0, epochs)
plt.grid(True)
plt.semilogy()  # log scale on the cost axis
plt.show()
```
